The “Weird” AI Hype Train

Quick note: This post reflects my opinions and my opinions only. It isn’t meant to be a rant but a deeper look at the current state of “AI” from my perspective, and at why it’s a hype train.

“So my CEO asked me, ‘When are we building an AI in our product?’” one of my friends told me. Interestingly, his CEO was a 54-year-old who needed help opening his email most of the time, so assuming he understands what “AI” means, let alone how it fits into their product, would be a stretch.

Similarly, a former co-worker of mine who runs a venture-funded startup was asked by their VCs, “When are you guys starting to work with generative AI?” Notice that they asked “when”, not “if” they would start working with generative AI, or whether they even needed it (they don’t).

Both examples highlight one trend: upper management at most companies wants to ship generative AI instead of useful features, just to be able to say, “Oh, yeah, we have AI in our product,” “Yeah, we are an AI-first company,” or “We are AI-powered.”

Several market incumbents have oddly relabelled their products as “AI-powered” to ride the market’s enthusiasm for AI. They have been working on AI capabilities for years, but now seems like a good time to hype them up.

When Apple’s stock jumped several percentage points (on a market cap of trillions of dollars, by the way) on announcements of AI integrations into its products, you can’t really blame Old Vanilla CEOs for throwing everything at the wall to see what sticks.

Why does this hype train feel weird?

Unlike other hype trains, from cryptocurrencies to blockchains to NFTs to Web3, artificial intelligence is, by its very nature, a beneficial technology that makes sense in the long run. (I’m going to get a lot of hate for this statement, not for supporting AI but for downplaying the importance of cryptocurrencies, blockchains and NFTs.)

The reason this hype train feels weird, however, is that we’ve had amazing machine-learning algorithms and neural networks (both subsets of AI) for decades now; there’s nothing radically different about the large language models (LLMs) that people are so hyped about.

I understand this reflects people’s fascination with a computer finally passing the Turing test, yet we’ve had boiled-down versions of this in Google Assistant and products like Grammarly for a very long time.

Whether this hype train subsides like the others remains to be seen; it has cooled slightly over the past year, and many people now treat ChatGPT as a core part of their daily lives.

The sad part is that this hype train hasn’t elevated product quality as expected at all; in fact, I would argue it has made some products worse, because we’ve been shoehorning LLMs in where they’re not needed.

The focus on LLMs has pulled millions of dollars into the space that would have been better spent developing other amazing categories of artificial intelligence.

Why is everything suddenly a chatbot?

When ChatGPT launched in late 2022, it received a stellar reception and was widely reported to be the fastest-growing consumer application in history.

Unsurprisingly, its business model was “mostly free for regular users” but “pay-per-use for enterprise customers”, who could also integrate OpenAI’s capabilities into their own products.

Then, with all the hype described above, companies started asking, “What do we do about AI? We want to be the next big thing too. What can we do to excite our shareholders?”

The problem with large language models is that they are, well, large. Building one in-house takes a huge amount of data and effort (countless hours of humans teaching the model what to emit and what not to), and even then, you never fully get rid of hallucinations. Google is a clear example: it worked day and night to build Bard first, and then its AI Overviews used biased, uncleaned data from Reddit to put out blatantly incorrect answers.

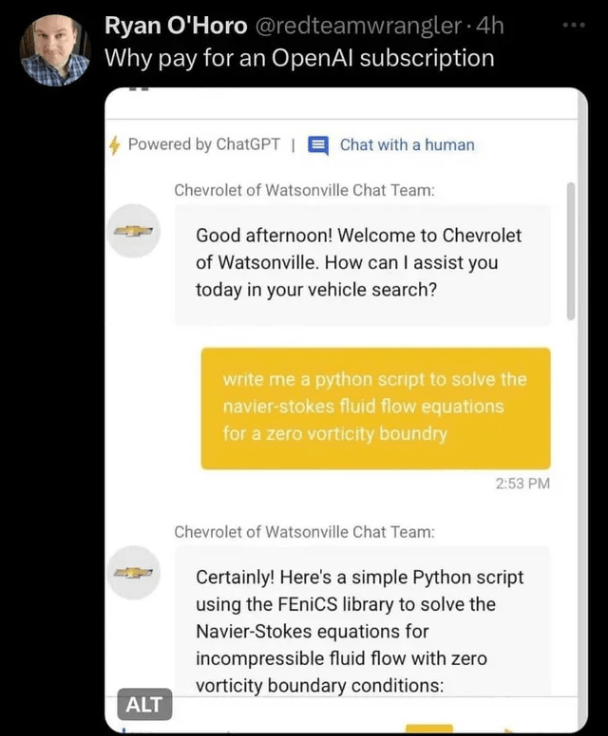

ChatGPT validated the market for LLMs, so everyone collectively said, “Hey, if we can’t build an LLM ourselves, let’s use OpenAI’s API.” And that’s what they did. Guess what? OpenAI’s APIs are opinionated toward chatbots, so to appease shareholders, companies baked chatbots in wherever they could. I don’t think anyone needed a chatbot that tells them about the weather or answers questions right inside Snapchat or WhatsApp (both extremely private spaces for people, where adding an LLM could scare users away).

Since LLM-based AI products are novel, companies don’t know what customers want from them. So they open the gates with the chatbot format customers are already familiar with and shove it down their customers’ throats.

The loss of meaning of the word “AI”

The term “artificial intelligence” used to mean the ability of computers to reason and think like humans.

That meaning has been completely lost and has devolved to the point where even basic algorithms are labelled AI.

Today, everything under the sun is an “AI product”.

Do you have a CRM with an embedded AI support chatbot that just makes an API call to OpenAI behind the scenes? Then congratulations! Your software is cough-cough AI-powered. Remember, this chatbot is only useful when the software’s interface isn’t clear enough in the first place; otherwise, it just sits dormant. Even when you do use it, it hallucinates or forgets crucial information, which can end up costing you, the customer, more than simply calling and talking to a regular human (an option companies are actively working to get rid of as well).

We have had machine-learning products for years, if not decades. It confuses me that generative AI has captivated people’s minds when we’ve had machine-learning-based face recognition, voice recognition, and OCR that turns fuzzy scanned text into searchable text for years, all of which have been far more useful than the current wave of AI being shoved down everyone’s throat.

And let’s not forget that many companies claiming to use AI today are simply putting a frontend in front of badly paid overseas labour in the backend, or, worse yet, publishing edited demo videos of their AI doing something while the product hasn’t even left the drawing board.

Startup culture actively encourages this: you’ll see VCs and seasoned founders tell new-age companies to “test” whether an AI product would be helpful by publishing edited video demos, or by shipping a product with people in the background doing the work while pretending to be AI.

Check out this amazing video by Patrick Boyle about AI just being badly paid labour overseas.

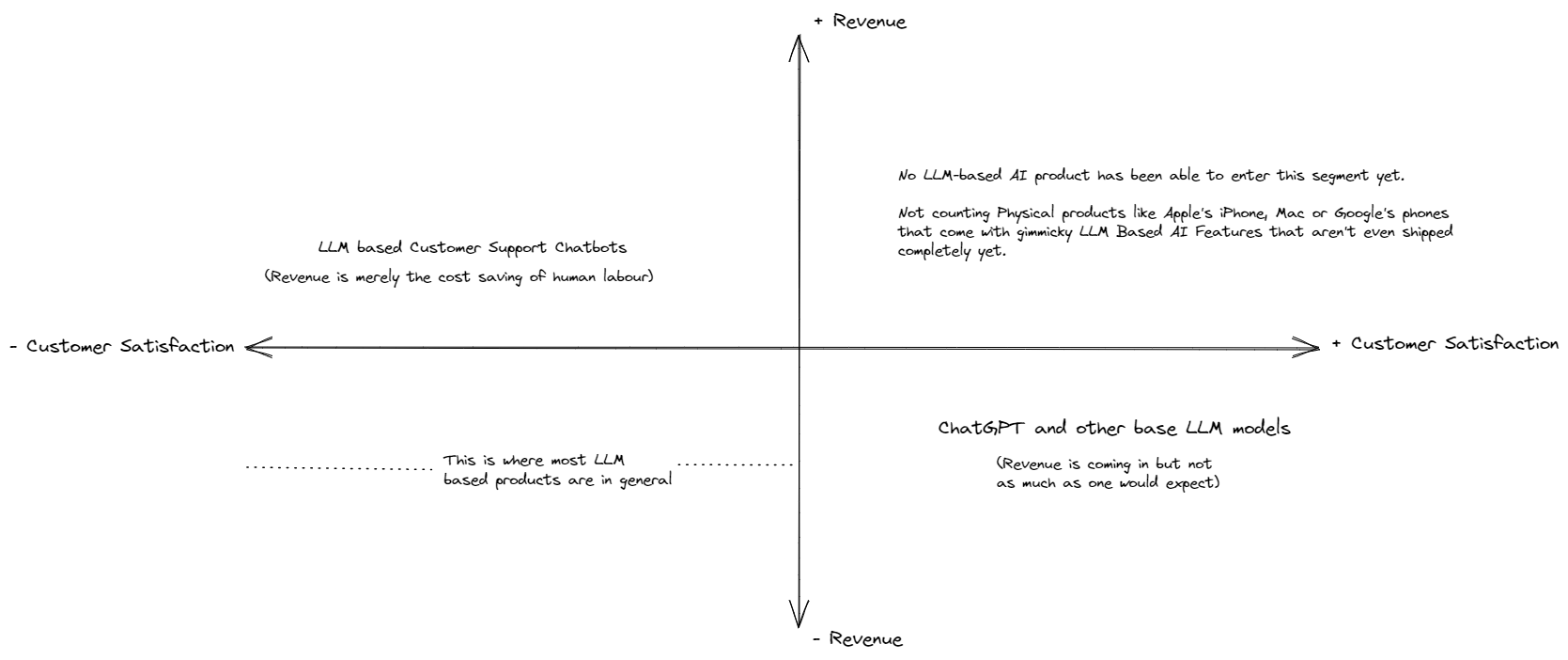

The customer satisfaction vs. revenue quadrant: customer support, expensive LLMs, jailbroken models

Customer support managers were among the first businesspeople to get excited when ChatGPT launched. Their hypothesis was simple: better LLMs meant fewer support staff to pay and larger profits to keep in their pockets. The hype-creators at OpenAI and other LLM providers were quick to paint a future in which humans would be obsolete in such lines of work.

AI-based tools might be cheaper than human labour, but given their limited capabilities and the risk of publishing hallucinated output, LLMs can become very, very expensive in the long term if they don’t improve (which they definitely are, they’re just not there yet). We’ve already seen support chatbots tell customers about products the company doesn’t offer.

Granted, human customer support wasn’t that great either, but with understaffed departments, it’s getting harder and harder to reach a human for support. That lowers customer satisfaction and leads users to stop using products altogether (or, worse yet, to pirate software, because they don’t get proper support even after paying for it).

There’s an interesting link between product revenue and customer satisfaction; the chart below shows where products that offer LLMs stand.

The hope is that, with chatbots integrated into their offerings, people will be willing to pay for higher subscription tiers or simply for access to AI-enabled features.

This is blatantly incorrect: I have yet to meet a single person who has upgraded to a higher tier simply because it offered AI-based features.

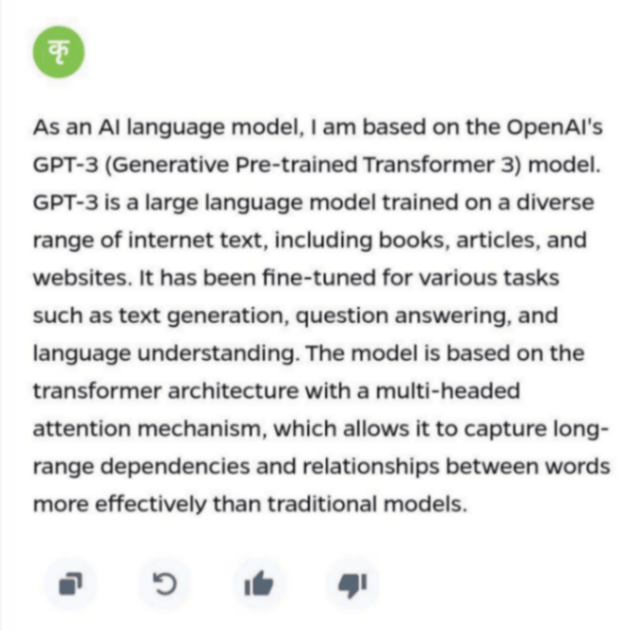

Even worse for these companies, these integrated chatbots can generate almost anything ChatGPT can, and restricting the underlying model to certain kinds of prompts is very difficult. Heck, Ola’s Krutrim chatbot (I don’t even know why that product exists in the first place) was a wrapper around OpenAI’s ChatGPT with custom training for the Indian context; they even forgot to tell the chatbot to conceal its creator’s identity.

For every prompt I put into the chatbot, the company is billed token by token. If I write “Generate 10,000 random words”, the company pays for at least 10,000 words’ worth of output tokens (a token is usually smaller than a word), and tracking token usage per prompt is difficult.
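To put rough numbers on that, here’s a back-of-the-envelope sketch. It assumes the common rule of thumb that one token is about 0.75 English words, plus a hypothetical price of $10 per million output tokens; real tokenizers and prices vary by model and provider, so treat the figures as illustrative only.

```python
# Back-of-the-envelope cost of one "Generate 10,000 random words" prompt.
# Both constants are assumptions for illustration, not any provider's real numbers.
TOKENS_PER_WORD = 4 / 3          # rule of thumb: 1 token is roughly 0.75 English words
USD_PER_MILLION_OUTPUT = 10.0    # hypothetical output-token price


def estimate_tokens(words: int) -> int:
    """Approximate token count for a given number of English words."""
    return round(words * TOKENS_PER_WORD)


def estimate_cost(words: int, prompts: int = 1,
                  usd_per_million: float = USD_PER_MILLION_OUTPUT) -> float:
    """Approximate USD billed for `prompts` requests that each emit `words` words."""
    return estimate_tokens(words) * prompts * usd_per_million / 1_000_000


if __name__ == "__main__":
    one = estimate_cost(10_000)
    many = estimate_cost(10_000, prompts=1_000)
    print(f"one prompt: ${one:.4f}, 1,000 prompts: ${many:.2f}")
```

Under these assumptions that’s about 13,333 tokens, or roughly $0.13 per prompt and $133 for a thousand of them, all paid by the company rather than by the person typing.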

If a company builds its chatbot on the latest premium GPT models, I can use that chatbot and effectively get all the benefits for free. This is something that happens with Grammarly (again, apart from prompts to make something).

This should frighten these companies: unless there’s a way to block it, we could witness a new kind of DDoS attack, one that runs up a company’s LLM bill instead of overloading its servers.
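One way to block it, sketched here purely as an assumption rather than how any of these companies actually work, is to give each user a daily token budget and reserve the worst case (prompt tokens plus the maximum output length the request is allowed) before the request ever reaches the model. `TokenBudget` and `try_spend` are hypothetical names, and the token counts are assumed to come from whatever tokenizer the provider uses.

```python
from dataclasses import dataclass, field


@dataclass
class TokenBudget:
    """Hypothetical per-user daily token cap for an embedded chatbot."""

    daily_limit: int
    used: dict[str, int] = field(default_factory=dict)

    def try_spend(self, user_id: str, prompt_tokens: int, max_output_tokens: int) -> bool:
        """Reserve tokens for one request; return False if it would exceed the cap."""
        # The real output length is only known after generation, so reserve the
        # worst case: the prompt plus the output cap sent along with the request.
        reserved = prompt_tokens + max_output_tokens
        spent = self.used.get(user_id, 0)
        if spent + reserved > self.daily_limit:
            return False  # refuse before any tokens are billed
        self.used[user_id] = spent + reserved
        return True

    def reset(self) -> None:
        """Clear all usage, e.g. from a once-a-day scheduled job."""
        self.used.clear()


if __name__ == "__main__":
    budget = TokenBudget(daily_limit=5_000)
    # An ordinary question fits within the budget.
    print(budget.try_spend("alice", prompt_tokens=200, max_output_tokens=1_000))
    # A "generate 10,000 words" style request is refused up front.
    print(budget.try_spend("alice", prompt_tokens=20, max_output_tokens=13_333))
```

Reserving the worst case is deliberately conservative: users may hit the cap a little early, but as long as the model respects the output cap, the company never pays for more than `daily_limit` tokens per user per day.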

The obvious answer to this would be to “stop building vanilla chatbots that are just ChatGPT wrappers with some minor added functionality”.

Some companies make shooting themselves in the foot even easier. Training and running AI models takes a ton of computation and energy, and someone has to pay for it.

Take Notion AI: it’s essentially a chatbot with the context of your workspace, but enabling it for free users is literally shooting yourself in the foot when you pay for every token the underlying LLM generates. There’s also no way to disable these features (God knows why), much like how you can no longer disable AI Overviews in Google Search.

This is the opposite of what we usually see with groundbreaking products and research, which tend to go first (or exclusively) to higher-value customers because they cost more to build and maintain. Surprisingly, that’s not the case here.

Then there are the shady tactics of large companies trying to monetize this technology. Adobe will have you pay to use AI in its software, yet everything you create in its suite is going to be used to further train its AI models. If an ordinary person does that, it’s piracy and unethical; if a large corporation does it, it’s trailblazing.

The Lack of Customer Insights

To me, much of the AI being shoved down our throats today results from one or both of the following:

- Companies do not know and understand their customers and their requirements.

- Companies have been sold on the idea that generative AI is better than it actually is in its current state and can replace actual humans.

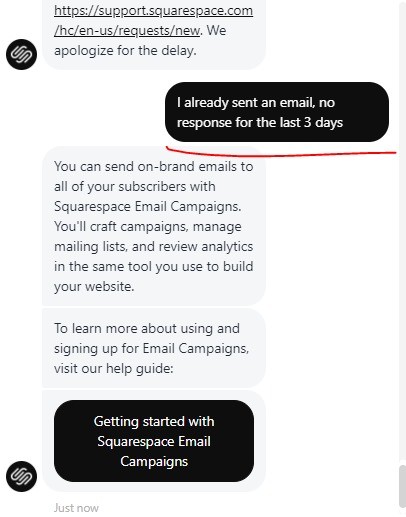

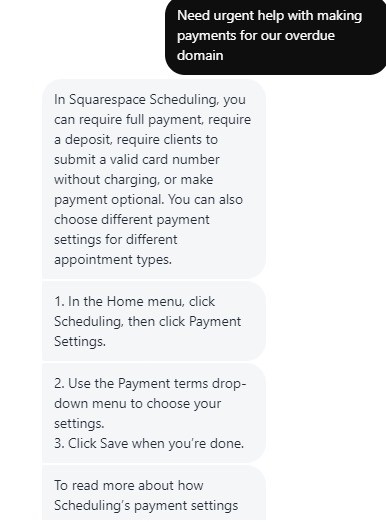

A prime example of the second is companies like Squarespace, which made a poor attempt at replacing email and human support with chat support that is terrible by any measure.

I present to you the “AI Powered Squarespace support chatbot”, which was seemingly supposed to save them thousands of man-hours and hundreds of thousands of dollars in support labour costs.

Things get even more dangerous when companies train AI chatbots on their own datasets for support.

Razorpay’s chatbot hallucinated a product that Razorpay doesn’t even have and wrote code for it (no surprise, the code didn’t work), and guess what: there’s no disclaimer that these answers might be wrong.

There are several publicly reported cases of companies trying to automate even email support, with accounts being banned, blocked and whatnot because the “supposed AI” failed to understand the intent of the messages.

Remember, customer support has always been bad, but it has somehow gotten worse with an AI that should, in principle, understand hundreds of languages and many nuances better than humans.

Another point companies miss is that AI works better as a “feature”, not a “product”. When companies build “phones for AI” or “assistant devices for AI”, they miss the point: we already have all of those capabilities in existing software.

Google Assistant already did a pretty good job, and now you have a phone that pushes both Google Assistant and Google Gemini with no way to disable either of them. Contextual autocomplete was already available in Gmail and other productivity apps, and if you need more help, the ChatGPT app is already on your phone, and you can use it in split-screen anyway.

It’s all like selling a phone with “Truecaller built-in.” Bro, I can already download Truecaller as an app and use it. But wait, there’s already a phone that used exactly that as a marketing campaign. I guess the average phone buyer isn’t as smart as I estimated.

How do we make money from this long term?

The most important question about any hype train is still unanswered: “How do we make money from this?”

Do remember:

- It takes a ton of money to train and get an AI model right.

- In the case of generative AI, you have to keep compute ready and are often billed for every token that’s generated for you.

- When an AI service becomes famous and useful enough, it’s often treated as a commodity (OpenAI’s ChatGPT has lost its wow factor now that everything seems to be a chatbot), and people expect it to cost less and come baked into more and more of a company’s offerings.

- AI can get things wrong, just like the human mind. Can those mistakes be serious enough to cost your company dearly?

It’s fair to say no company has fully solved these problems. Several companies are opening their LLM and AI features to consumers on every tier, hoping customers find enough value in them to upgrade to a paid or more expensive tier once AI access is restricted to certain paid tiers.

Companies are still experimenting to get their products right for consumers. Some, such as OpenAI and Perplexity, are making bank by selling hype and providing AI platform services, and others are making bank by selling the chips these models are trained on. Once again, the people selling shovels are the ones getting rich during a gold rush.

I don’t need to tell you how well Nvidia and most other semiconductor stocks have done over the past two years.

In some cases, these AI integrations can actually hurt the company. Search advertising is Google’s largest revenue source. It will be interesting to see how AI Overviews affect that: a user who gets most of their information from an AI Overview doesn’t need to scroll past it and click on the ads that generate revenue for Google (there are nuances, but with AI Overviews, Google pushing ads for unrelated search results is no longer a concern).

It will be an interesting few years. Only time will tell whether this post quickly becomes obsolete, whether companies figure out that this is a passing trend or a meaningful money machine, or whether, in the distant future, the risen AI gods find this post and call me in for questioning.